AI Student Helper

🚀 Introduction

AI Student Helper is a multi-agent automation system built with LangGraph to assist students and professionals in automating academic and career-related tasks. It follows a graph-based workflow execution model, ensuring efficient task routing and execution.

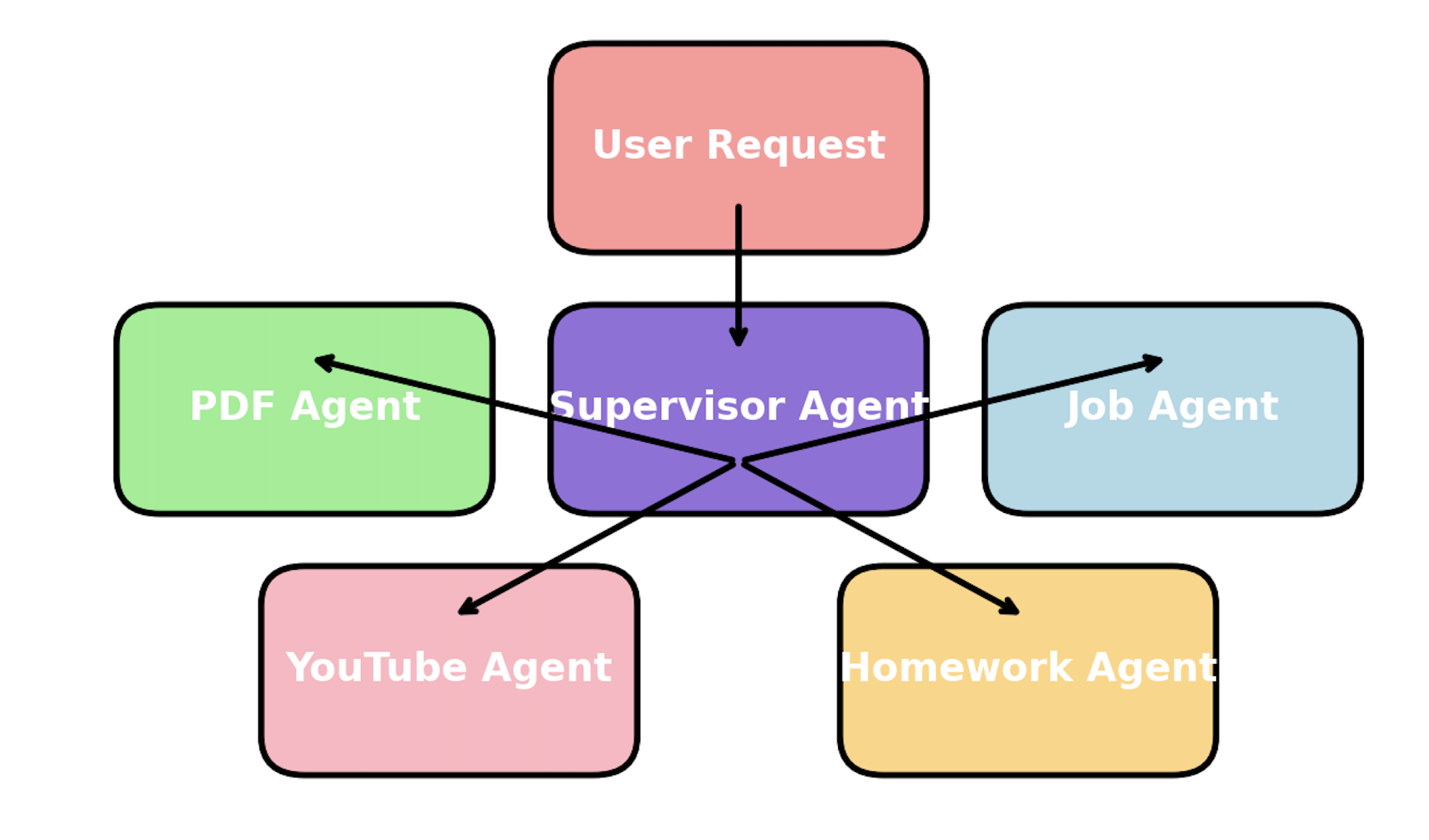

The AI Student Helper follows a modular multi-agent architecture, where:

- A Supervisor Agent acts as a task router.

- Specialized Agents execute specific tasks based on user input.

- A graph-based workflow dynamically determines execution paths.

🚀 System Architecture

🚀 High Level Diagram

🚀 Technologies Used

| Technology | Role |
|---|---|
| Python | Core programming language |
| LangGraph | Manages workflow execution |
| ChatOpenAI | Provides AI-powered responses |
| Streamlit | Enables interactive UI |
| Selenium | Automates job applications |
| pytube | Extracts YouTube video transcripts |
| PyMuPDF | Parses PDF files |

🚀 Workflow Execution

📌 Request Handling Flow

- User enters a request (e.g., "summarize a PDF").

- Supervisor Agent extracts intent.

- Relevant agent is activated.

- Task is processed, and output is generated.

- Response is returned to the user.
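The request flow above can be sketched in plain Python. This is a minimal illustration, not the project's implementation: the keyword-based `extract_intent` stands in for the LLM call, and the agent stubs, intent names, and `KEYWORDS` table are hypothetical.

```python
from typing import Callable

# Hypothetical stand-ins for the specialized agents; the real agents call
# external tools (PDF parsing, transcription, browser automation, ...).
def pdf_agent(request: str) -> str:
    return f"[pdf_agent] handled: {request}"

def youtube_agent(request: str) -> str:
    return f"[youtube_agent] handled: {request}"

def job_agent(request: str) -> str:
    return f"[job_agent] handled: {request}"

def homework_agent(request: str) -> str:
    return f"[homework_agent] handled: {request}"

# Dispatch table: intent name -> agent function.
AGENTS: dict[str, Callable[[str], str]] = {
    "pdf": pdf_agent,
    "youtube": youtube_agent,
    "job": job_agent,
    "homework": homework_agent,
}

# Assumed keyword table; the Supervisor Agent uses an LLM for this step.
KEYWORDS = {
    "pdf": ("pdf",),
    "youtube": ("youtube", "video"),
    "job": ("job", "resume", "linkedin"),
}

def extract_intent(request: str) -> str:
    """Return the first intent whose keywords appear in the request."""
    text = request.lower()
    for intent, words in KEYWORDS.items():
        if any(word in text for word in words):
            return intent
    return "homework"  # fallback when nothing else matches

def handle_request(request: str) -> str:
    """Extract the intent, activate the matching agent, return its output."""
    return AGENTS[extract_intent(request)](request)
```

Keeping the routing decision (`extract_intent`) separate from the dispatch table means the keyword lookup could later be swapped for an LLM call without touching the agents.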

📌 Graph Execution with LangGraph

- Each node in the graph represents an agent.

- The edges define execution logic based on user input.

- This structure allows dynamic branching without unnecessary processing.
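To make the node and edge model concrete, here is a small plain-Python stand-in for the graph. It deliberately does not use the LangGraph API; the node names, the layout of the state dictionary, and the `run_graph` helper are assumptions made for illustration.

```python
from typing import Callable, Optional

State = dict  # shared state passed from node to node

def supervisor(state: State) -> State:
    # Stand-in for LLM-based routing: choose the branch from the request text.
    wants_pdf = "pdf" in state["request"].lower()
    state["route"] = "pdf_agent" if wants_pdf else "homework_agent"
    return state

def pdf_agent(state: State) -> State:
    state["result"] = "pdf summary"
    return state

def homework_agent(state: State) -> State:
    state["result"] = "homework answer"
    return state

# Each node in the graph is an agent.
NODES: dict[str, Callable[[State], State]] = {
    "supervisor": supervisor,
    "pdf_agent": pdf_agent,
    "homework_agent": homework_agent,
}

# Each edge function maps the current state to the next node (None = stop).
EDGES: dict[str, Callable[[State], Optional[str]]] = {
    "supervisor": lambda state: state["route"],
    "pdf_agent": lambda state: None,
    "homework_agent": lambda state: None,
}

def run_graph(request: str, entry: str = "supervisor") -> State:
    """Walk the graph from the entry node, running only the selected branch."""
    state: State = {"request": request, "visited": []}
    node: Optional[str] = entry
    while node is not None:
        state["visited"].append(node)
        state = NODES[node](state)
        node = EDGES[node](state)
    return state
```

For a PDF request only `supervisor` and `pdf_agent` appear in `visited`, which is the "no unnecessary processing" property described above.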

🚀 Key Components

📌 Supervisor Agent

- Analyzes user input using an LLM.

- Routes each request to the correct specialized agent.

- Triggers that agent and returns the processed result.

📌 Specialized Agents

📄 PDF Summarizer (pdf_agent)

- Prompts the user for a PDF file path.

- Extracts text content using `PyMuPDF` or `pdfplumber`.

- Uses an LLM-based summarization function to generate key points.

- Returns a structured summary.


Technical Documentation: PDF Analyzer

1. Overview

The PDF Analyzer is an AI-powered system that extracts text from PDF documents and analyzes its content using OpenAI's GPT-based models. It creates structured knowledge bases and provides summarized insights.

2. System Architecture

- Uses OpenAI's LLM models for text analysis.

- Extracts content using PyMuPDF (fitz) for efficient PDF text parsing.

- Stores extracted knowledge in structured JSON format.

- Summarizes processed text using OpenAI-based summarization.
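As an illustration of the JSON storage step, the sketch below writes and reads a knowledge base with the standard library. The file layout (`{"knowledge": [...]}`) and the helper names `save_knowledge` and `load_knowledge` are assumptions; the original storage code is not shown in this document.

```python
import json
from pathlib import Path

def save_knowledge(path: Path, knowledge: list[str]) -> None:
    """Write the extracted knowledge items to a JSON file."""
    path.parent.mkdir(parents=True, exist_ok=True)
    with open(path, "w", encoding="utf-8") as f:
        json.dump({"knowledge": knowledge}, f, indent=2, ensure_ascii=False)

def load_knowledge(path: Path) -> list[str]:
    """Read knowledge items back; a missing file yields an empty list."""
    if not path.exists():
        return []
    with open(path, encoding="utf-8") as f:
        return json.load(f).get("knowledge", [])
```

Returning an empty list for a missing file lets a run start from scratch or resume from an earlier knowledge base with the same call.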

3. Dependencies

```python
from pathlib import Path
from pydantic import BaseModel
import json
from openai import OpenAI
import fitz  # PyMuPDF
from termcolor import colored
```

4. Configuration Constants

```python
BASE_DIR = Path("book_analysis")
PDF_DIR = BASE_DIR / "pdfs"
KNOWLEDGE_DIR = BASE_DIR / "knowledge_bases"
SUMMARIES_DIR = BASE_DIR / "summaries"
ANALYSIS_INTERVAL = 1
MODEL = "gpt-4o-mini"
ANALYSIS_MODEL = "o1-mini"
TEST_PAGES = 160
```

5. Class Breakdown

5.1 PageContent

```python
class PageContent(BaseModel):
    has_content: bool
    knowledge: list[str]
```

5.2 PDFAnalyzer

```python
class PDFAnalyzer:
    def __init__(self, pdf_name: str, test_pages: int = TEST_PAGES):
        self.PDF_NAME = pdf_name
        # Absolute paths are used as given; bare names are looked up in PDF_DIR.
        self.PDF_PATH = Path(pdf_name) if Path(pdf_name).is_absolute() else PDF_DIR / pdf_name
        self.OUTPUT_PATH = KNOWLEDGE_DIR / f"{self.PDF_NAME.replace('.pdf', '_knowledge.json')}"
        self.TEST_PAGES = test_pages
        self.client = OpenAI()  # reads OPENAI_API_KEY from the environment
```
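The path handling in `__init__` can be checked in isolation. The helper below is a hypothetical sketch (`resolve_paths` is not part of the original class). It mirrors the constants from the configuration section but derives the output name from the file stem, so the output stays inside `KNOWLEDGE_DIR` even when an absolute PDF path is passed.

```python
from pathlib import Path

BASE_DIR = Path("book_analysis")
PDF_DIR = BASE_DIR / "pdfs"
KNOWLEDGE_DIR = BASE_DIR / "knowledge_bases"

def resolve_paths(pdf_name: str) -> tuple[Path, Path]:
    """Return (pdf_path, output_path) for a bare file name or an absolute path."""
    candidate = Path(pdf_name)
    # Absolute paths are used as-is; bare names are resolved under PDF_DIR.
    pdf_path = candidate if candidate.is_absolute() else PDF_DIR / candidate
    # Use only the stem so the output always lands inside KNOWLEDGE_DIR.
    output_path = KNOWLEDGE_DIR / f"{candidate.stem}_knowledge.json"
    return pdf_path, output_path
```

Joining a directory with an absolute path discards the directory in `pathlib`, which is why the sketch builds the output name from `stem` rather than from the full input string.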

6. Workflow Execution

- User provides a PDF file for processing.

- PDFAnalyzer extracts text using PyMuPDF.

- Content is analyzed and converted into structured knowledge.

- Summarization is performed using OpenAI's GPT model.

- Summarized insights are stored and displayed.
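The workflow above can be outlined as a loop over pages. This sketch is an assumption about how the pieces fit together: the reading of `ANALYSIS_INTERVAL` as "summarize every N pages" and of `TEST_PAGES` as a page limit is a guess, and the real text extraction and OpenAI calls are replaced by the injected callables `extract` and `summarize`.

```python
from typing import Callable

def analyze_pages(
    pages: list[str],
    extract: Callable[[str], list[str]],
    summarize: Callable[[list[str]], str],
    interval: int = 1,
    max_pages: int = 160,
) -> tuple[list[str], list[str]]:
    """Accumulate knowledge page by page and summarize every `interval` pages."""
    knowledge: list[str] = []
    summaries: list[str] = []
    for page_num, text in enumerate(pages[:max_pages], start=1):
        knowledge.extend(extract(text))  # pages without content add nothing
        if interval and page_num % interval == 0:
            summaries.append(summarize(knowledge))
    return knowledge, summaries
```

Injecting the two callables keeps the loop testable without network access; in the real system they would wrap `process_page` and `analyze_knowledge_base`.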

7. Key Methods

7.1 Setting Up Directories

```python
def setup_directories(self):
    """Ensure necessary directories exist and check the PDF file."""
    for directory in [PDF_DIR, KNOWLEDGE_DIR, SUMMARIES_DIR]:
        directory.mkdir(parents=True, exist_ok=True)
    if not self.PDF_PATH.exists():
        raise FileNotFoundError(f"PDF file '{self.PDF_PATH}' not found")
```

7.2 Extracting Knowledge

```python
def process_page(self, page_text: str, current_knowledge: list[str], page_num: int) -> list[str]:
    """Process a single page using OpenAI to extract knowledge."""
    # Structured-output call: the reply is parsed into a PageContent instance.
    completion = self.client.beta.chat.completions.parse(
        model=MODEL,
        messages=[
            {"role": "system", "content": "Analyze this page as if you're studying from a book."},
            {"role": "user", "content": f"Page text: {page_text}"},
        ],
        response_format=PageContent,
    )
    result = completion.choices[0].message.parsed
    # Pages without useful content contribute nothing.
    return result.knowledge if result.has_content else []
```

7.3 Analyzing Extracted Knowledge

```python
def analyze_knowledge_base(self, knowledge_base: list[str]) -> str:
    """Generate a final book analysis from extracted knowledge."""
    completion = self.client.chat.completions.create(
        model=MODEL,
        messages=[
            {"role": "system", "content": "Summarize the extracted knowledge in markdown format"},
            {"role": "user", "content": "\n".join(knowledge_base)},
        ],
    )
    return completion.choices[0].message.content
```

7.4 Saving Summarized Content

```python
def save_summary(self, summary: str, is_final: bool = False):
    """Save the generated summary as a Markdown file."""
    summary_path = SUMMARIES_DIR / f"{self.PDF_NAME.replace('.pdf', '')}_{'final' if is_final else 'interval'}.md"
    with open(summary_path, 'w', encoding='utf-8') as f:
        f.write(summary)
    print(f"✅ Summary saved to: {summary_path}")
```

8. Conclusion

The PDF Analyzer is an AI-driven tool designed to extract meaningful insights from books and research papers. It efficiently integrates OpenAI's models, NLP processing, and structured knowledge extraction to streamline the analysis of complex PDFs.

🚀 Optimized for accuracy, efficiency, and automation! 🚀

🎥 YouTube Video Summarizer (youtube_agent)

- Uses `pytube` to download transcripts (if available).

- If no transcript is available, leverages Whisper for speech-to-text conversion.

- Summarizes content using a LangGraph-based AI model.
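The "captions first, speech-to-text as fallback" behavior can be sketched as follows. The function and parameter names are hypothetical, and the two callables stand in for the real caption download and Whisper transcription, which are not reproduced here.

```python
from typing import Callable, Optional

def get_transcript(
    url: str,
    fetch_captions: Callable[[str], Optional[str]],
    transcribe_audio: Callable[[str], str],
) -> tuple[str, str]:
    """Return (text, source), preferring existing captions over speech-to-text."""
    try:
        captions = fetch_captions(url)
    except Exception:
        # Treat any caption failure as "no transcript available".
        captions = None
    if captions:
        return captions, "captions"
    # Fallback: run speech-to-text on the audio track.
    return transcribe_audio(url), "speech-to-text"
```

Returning the source alongside the text makes it easy to log which path was taken for a given video.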


Technical Documentation: Video Summarizer System

1. Overview

The Video Summarizer System is an AI-powered solution that extracts, processes, and summarizes spoken content from YouTube videos. It leverages speech-to-text transcription, natural language processing (NLP), and LLM-based summarization for efficient video content analysis.

2. System Architecture

- SourceConfig – Handles video source configurations and settings.

- Transcriber – Extracts and processes audio from YouTube videos.

- Summarizer – Sends transcriptions to OpenAI's GPT model for summarization.

- Concurrency Handling – Implements multi-threaded processing for efficiency.

- File Management – Stores transcription and summary outputs.

3. Dependencies

- `subprocess` - Executes system commands

- `re` - Performs regex-based text parsing and cleaning

- `os` - Handles file operations and environment variable management

- `json` - Reads and writes structured JSON data

- `dotenv` - Loads API keys and environment variables

- `youtube_transcript_api` - Fetches YouTube captions automatically

- `openai` - Calls GPT-based models for summarization

- `pytubefix` - Downloads YouTube videos/audio streams

- `concurrent.futures` - Manages multi-threaded execution

- `time` - Handles execution delays and error handling

4. Class Breakdown

4.1 SourceConfig

```python
class SourceConfig:
    def __init__(self, source_type="YouTube Video", source_url=""):
        self.type = source_type
        self.url = source_url
        self.use_youtube_captions = True
        self.language = "auto"
        self.transcription_method = "Cloud Whisper"
```

4.2 Transcriber

```python
class Transcriber:
    def __init__(self, source_config: SourceConfig):
        self.source_config = source_config
        self.transcription_text = ""
        self.transcript_file_name = ""
```

4.3 Summarizer

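```python
import openai

class Summarizer:
    def __init__(self, transcriber: Transcriber, model="gpt-4o-mini"):
        self.transcriber = transcriber
        self.model = model
        # get_api_key() is a Transcriber method that is not shown in this excerpt.
        self.client = openai.OpenAI(api_key=self.transcriber.get_api_key())
```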

5. Workflow Execution

- User inputs a YouTube video URL.

- Supervisor module initializes SourceConfig.

- Transcriber processes video/audio.

- Text is pre-processed and split into chunks.

- Summarizer sends text to OpenAI API.

- Summarized text is saved to a file.
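The "split into chunks" step can be illustrated with a simple word-based splitter. The chunking rule and the `max_words` default are assumptions; the original pre-processing code is not shown in this document.

```python
def split_into_chunks(text: str, max_words: int = 500) -> list[str]:
    """Split text into chunks of at most `max_words` whitespace-separated words."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    words = text.split()
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]
```

Each chunk can then be sent to the summarization model independently, which is what makes the parallel API calls in the performance section possible.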

6. Deployment & Setup

Installation

```bash
pip install -r requirements.txt
```

Running the Application

```bash
python VideoSummarizer.py
```

Required Environment Variables

```bash
export OPENAI_API_KEY="your_openai_api_key"
```

7. Performance Optimization

Concurrency with Multi-threading

```python
with concurrent.futures.ThreadPoolExecutor(max_workers=self.parallel_api_calls) as executor:
    # Map each submitted future back to the index of its chunk.
    future_to_chunk = {executor.submit(self.summarize, text_chunk): idx for idx, text_chunk in enumerate(cleaned_texts)}
```
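A self-contained version of this pattern, including result collection, might look like the sketch below. The function name `summarize_all` and the `max_workers` default are hypothetical; `summarize` is any callable that maps one chunk to its summary.

```python
import concurrent.futures
from typing import Callable

def summarize_all(
    chunks: list[str],
    summarize: Callable[[str], str],
    max_workers: int = 4,
) -> list[str]:
    """Summarize chunks in parallel and return results in the original order."""
    results: list = [None] * len(chunks)
    with concurrent.futures.ThreadPoolExecutor(max_workers=max_workers) as executor:
        # Map each future back to the index of the chunk it processes.
        future_to_idx = {
            executor.submit(summarize, chunk): idx
            for idx, chunk in enumerate(chunks)
        }
        # Futures finish in arbitrary order; the index restores the ordering.
        for future in concurrent.futures.as_completed(future_to_idx):
            results[future_to_idx[future]] = future.result()
    return results
```

Threads suit this workload because each call spends most of its time waiting on the network rather than using the CPU.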

8. Error Handling

```python
# Fragment: handler for the try block that opens prompts.json.
except FileNotFoundError:
    print("⚠️ Error: prompts.json file not found. Using default prompt.")
```
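For context, a complete version of this fallback could look like the sketch below. The structure of `prompts.json` (a flat object with a `"summary"` key), the default prompt text, and the `load_prompt` helper are all assumptions made for illustration.

```python
import json

# Assumed default; the project's actual default prompt is not shown here.
DEFAULT_PROMPT = "Summarize the following transcript."

def load_prompt(path: str = "prompts.json", key: str = "summary") -> str:
    """Load a prompt from a JSON file, falling back to a default if missing."""
    try:
        with open(path, encoding="utf-8") as f:
            prompts = json.load(f)
    except FileNotFoundError:
        print("Error: prompts.json file not found. Using default prompt.")
        return DEFAULT_PROMPT
    # A missing key also falls back to the default.
    return prompts.get(key, DEFAULT_PROMPT)
```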

9. Future Enhancements

- Support for multilingual transcription.

- Advanced NLP summarization using fine-tuned LLMs.

- Integration with cloud storage (AWS S3, Google Drive).

10. Conclusion

The Video Summarizer System is a powerful AI-driven tool that automates video-to-text summarization. With multi-threaded execution, optimized processing pipelines, and LLM integration, it efficiently extracts and summarizes content, making it ideal for content creators, researchers, and students.

🚀 Optimized for speed, accuracy, and scalability! 🚀

💼 Job Application Assistant (job_agent)

- Extracts resume information.

- Uses `Selenium` or `LinkedIn API` to automate job applications.

- Matches jobs based on LLM-powered profile analysis.


🛠️ Technical Documentation - LinkedIn Easy Apply Bot

1️⃣ System Overview

This system is a Python-based bot that automates job applications through LinkedIn's Easy Apply feature. It drives a headless browser via Selenium WebDriver, interacts with LinkedIn's page elements, and applies to job listings that match pre-configured user preferences.

2️⃣ System Architecture

- 📜 `config.yaml`: Persistent configuration store defining job search parameters, application filters, and user authentication details.

- 🔧 `linkedineasyapply.py`: Implements the bot execution logic, handling authentication, job filtering, and automated form submission using XPath selectors.

- 🚀 `main.py`: Entry-point script responsible for orchestration, invoking the LinkedIn bot, and handling execution flow.

3️⃣ Configuration Management - config.yaml

The config.yaml file is a structured hierarchical data model defining key operational parameters:

```yaml
email: "user@example.com"
password: "securepassword"
remote: true
jobTypes:
  full-time: true
  contract: true
experienceLevel:
  entry: true
  mid-senior level: false
uploads:
  resume: "/path/to/resume.pdf"
```
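To show how such a configuration could drive filtering, the sketch below works on the dictionary a YAML parser would produce from the file above. The `job` keys (`type`, `level`, `remote`), the helper names, and the reading of `remote: true` as "remote jobs only" are assumptions, not taken from `linkedineasyapply.py`.

```python
# Dictionary equivalent of the relevant YAML keys (credentials omitted).
config = {
    "remote": True,
    "jobTypes": {"full-time": True, "contract": True},
    "experienceLevel": {"entry": True, "mid-senior level": False},
}

def enabled(options: dict[str, bool]) -> list[str]:
    """Return the option names that are switched on."""
    return [name for name, on in options.items() if on]

def matches(job: dict, cfg: dict) -> bool:
    """Check a job posting (hypothetical keys) against the configured filters."""
    if cfg.get("remote") and not job.get("remote", False):
        return False
    return (
        job.get("type") in enabled(cfg["jobTypes"])
        and job.get("level") in enabled(cfg["experienceLevel"])
    )
```

For example, `enabled(config["experienceLevel"])` yields only `"entry"`, so a mid-senior posting is rejected even when its job type is allowed.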

4️⃣ Core Functionalities - linkedineasyapply.py

This module is responsible for:

- 🔐 User Authentication via Selenium, handling 2FA bypass mechanisms.

- 📡 Web Scraping & DOM Navigation using XPath selectors and CSS locators.

- 🧠 Job Relevance Filtering based on configuration heuristics.

- 🤖 Automated Form Filling leveraging Selenium WebDriver.

- 📜 Logging & Error Handling using a robust exception handling pipeline.

5️⃣ Execution Workflow

The execution flow of the bot follows a finite-state machine (FSM) model:

- 🛠️ Initialization Phase - Load user parameters, configure session settings.

- 🔎 Job Discovery Module - Execute LinkedIn search queries using dynamic query formulation.

- 📡 Real-time Scraping & Job Filtering - Parse job postings and validate criteria.

- 🤖 Automated Application Submission - Simulate user actions via Selenium WebDriver.

- 📊 Logging & Post-Execution Analysis - Record applied jobs, handle exceptions, generate reports.
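The phase sequence above maps naturally onto a small state machine. The sketch below is illustrative only: the `Phase` names paraphrase the list, and the rule that any failure jumps straight to the logging phase is an assumption, not documented behavior of the bot.

```python
from enum import Enum, auto
from typing import Callable

class Phase(Enum):
    INITIALIZATION = auto()
    JOB_DISCOVERY = auto()
    SCRAPING_AND_FILTERING = auto()
    APPLICATION_SUBMISSION = auto()
    LOGGING = auto()
    DONE = auto()

# Normal (success) transitions between phases.
TRANSITIONS = {
    Phase.INITIALIZATION: Phase.JOB_DISCOVERY,
    Phase.JOB_DISCOVERY: Phase.SCRAPING_AND_FILTERING,
    Phase.SCRAPING_AND_FILTERING: Phase.APPLICATION_SUBMISSION,
    Phase.APPLICATION_SUBMISSION: Phase.LOGGING,
    Phase.LOGGING: Phase.DONE,
}

def run(handlers: dict[Phase, Callable[[], None]]) -> list[Phase]:
    """Run phases in order; a failing handler jumps straight to LOGGING."""
    history: list[Phase] = []
    phase = Phase.INITIALIZATION
    while phase is not Phase.DONE:
        history.append(phase)
        try:
            handlers.get(phase, lambda: None)()  # phases without a handler are no-ops
            phase = TRANSITIONS[phase]
        except Exception:
            # Assumed policy: record the failure, then stop.
            phase = Phase.DONE if phase is Phase.LOGGING else Phase.LOGGING
    return history
```

The returned history makes the executed path easy to inspect, for instance to confirm that a failed search skipped the submission phase.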

7️⃣ Key Technologies

- 🌐 Selenium WebDriver: Automates browser interactions.

- 📊 BeautifulSoup: Parses and extracts job listing data.

- ⚡ PyYAML: Handles structured configuration management.

- 📜 XPath Selectors: Enables precision DOM element selection.

8️⃣ Key Challenges & Solutions

- 🔐 LinkedIn CAPTCHA Challenges - Implemented human-mimicking delays & randomized interaction timing.

- 🕵️ Dynamic Page Structure Changes - Utilized XPath wildcard expressions & CSS selector fallback.

- 📡 Asynchronous Job Loading Issues - Deployed wait-based strategies with implicit & explicit waits.
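Two of the techniques above, randomized delays and wait-based polling, can be sketched with the standard library alone. The helper names and default timings are hypothetical. In the bot itself, explicit waits would more likely use Selenium's own `WebDriverWait` than a hand-written loop.

```python
import random
import time
from typing import Callable

def human_delay(min_s: float = 1.0, max_s: float = 3.0,
                sleep: Callable[[float], None] = time.sleep) -> float:
    """Pause for a random duration in [min_s, max_s] and return that duration."""
    delay = random.uniform(min_s, max_s)
    sleep(delay)
    return delay

def wait_until(condition: Callable[[], bool], timeout: float = 10.0,
               poll: float = 0.5,
               sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll `condition` until it is true or `timeout` seconds have elapsed."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        sleep(poll)
```

The `sleep` parameter exists so the helpers can be exercised in tests without real waiting.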

9️⃣ Performance Optimization

- 🚀 Parallel Execution - Utilized multi-threaded job searching.

- 🔄 Memory Optimization - Implemented garbage collection & lazy evaluation.

- 📡 Network Efficiency - Enabled cache-control for preloaded LinkedIn elements.

🔟 Conclusion

The LinkedIn Easy Apply Bot streamlines job applications through high-performance web automation, allowing users to apply for relevant roles with minimal manual intervention. Advanced error handling, job filtering, and AI-driven decision-making ensure a smooth and effective application process.

📚 Homework Helper (homework_agent)

- Uses Streamlit for an interactive UI.

- Implements LLM-based step-by-step problem-solving.

- Provides explainable responses for better understanding.


Technical Documentation: AI Homework Helper

1. Overview

The AI Homework Helper is a web-based application that uses OpenAI's GPT models to assist students with homework. It provides clarification, detailed solutions, quality assurance, and concise answers to academic questions.

2. System Architecture

- Uses OpenAI API for AI-powered responses.

- Built with Streamlit for an interactive user interface.

- Modular architecture with separate agents for different tasks.

- Custom styling for an intuitive UI experience.

3. Dependencies

```python
import os
import openai
import streamlit as st
```

4. Agents Module (agents.py)

4.1 Clarification Agent

Asks the student to clarify their question before providing a solution.

```python
# `client` is assumed to be a module-level openai.OpenAI() instance.
def clarification_agent(question):
    response = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": f"A student asked: '{question}'\n\nIs the question clear? If not, ask for more details:"},
        ],
    )
    return response.choices[0].message.content.strip()
```

4.2 Solution Agent

Provides a detailed explanation for the given question.

```python
def solution_agent(question):
    response = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": f"Provide a detailed solution to the following question: {question}"},
        ],
    )
    return response.choices[0].message.content.strip()
```

4.3 Quality Assurance Agent

Checks the accuracy and clarity of a generated solution.

```python
def quality_assurance_agent(solution_text):
    response = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "system", "content": "You are a quality assurance assistant."},
            {"role": "user", "content": f"Review the following solution and check for accuracy and clarity: {solution_text}"},
        ],
    )
    return response.choices[0].message.content.strip()
```

4.4 Concise Answer Agent

Generates a short, to-the-point answer for quick reference.

```python
def concise_answer_agent(question):
    response = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "system", "content": "Provide a short, precise answer."},
            {"role": "user", "content": f"Provide a concise answer to: {question}"},
        ],
    )
    return response.choices[0].message.content.strip()
```
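The four agents share the same call pattern and differ only in their prompts. One possible refactoring, shown here as a sketch rather than the project's code, moves the shared call into a hypothetical `ask` helper that takes the client as an argument.

```python
def ask(client, system_prompt: str, user_prompt: str,
        model: str = "gpt-3.5-turbo") -> str:
    """Send one system + user message pair and return the trimmed reply."""
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    )
    return response.choices[0].message.content.strip()

def concise_answer_agent(client, question: str) -> str:
    """Same prompts as the agent above, expressed through the shared helper."""
    return ask(
        client,
        "Provide a short, precise answer.",
        f"Provide a concise answer to: {question}",
    )
```

Passing `client` explicitly instead of relying on a module-level global also makes the agents straightforward to test with a stub object.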

5. Streamlit Application (streamlit_app.py)

5.1 Application UI

Streamlit is used to build a user-friendly interface with interactive buttons and text areas.

```python
import streamlit as st
from agents import clarification_agent, solution_agent, quality_assurance_agent, concise_answer_agent

def main():
    st.set_page_config(page_title="Homework Helper", page_icon=":books:", layout="wide")
    st.title("📚 AI Homework Helper")
    question = st.text_area("Enter your question here:")
    if st.button("Get Clarification"):
        st.write(clarification_agent(question))
    if st.button("Get Solution"):
        st.write(solution_agent(question))
    if st.button("Check Quality"):
        solution = solution_agent(question)
        st.write(quality_assurance_agent(solution))
    if st.button("Get Concise Answer"):
        st.write(concise_answer_agent(question))

if __name__ == "__main__":
    main()
```

5.2 Custom Styling

Enhances the UI experience with Streamlit's markdown styling.

```python
# The CSS payload is not included in this document.
st.markdown("""
""", unsafe_allow_html=True)
```

6. Deployment Instructions

Installation

```bash
pip install -r requirements.txt
```

Run Application

```bash
streamlit run streamlit_app.py
```

7. Conclusion

The AI Homework Helper efficiently uses OpenAI's models to assist students with homework by providing clear, structured, and verified answers. The modular design ensures maintainability and scalability for future enhancements.

🚀 Optimized for speed, accuracy, and user-friendly experience! 🚀

🚀 Deployment

To install and run:

```bash
git clone https://github.com/your-repo/ai-student-helper.git
cd ai-student-helper
pip install -r requirements.txt
python3 SupervisorLangGraph.py
```